Steps to start training your custom Tensorflow model in AWS SageMaker

Describing the most relevant steps to start training a custom algorithm in AWS SageMaker, showing how to manage experiments and how to solve some of the problems that arise with custom models and SageMaker script mode.

- SageMaker Experiments, TensorFlow script mode training and restore checkpoint to resume training

- Amazon SageMaker Overview

- Problem description

- Data description

- Set up the environment

- Create an experiment and trial

- Construct a script for training

- Create a training job using the TensorFlow estimator

- Start the training job: fit

- Restore a training job and download the trained model

- Make some predictions

- Resume training from a checkpoint

- Delete the experiment

- References

SageMaker Experiments, TensorFlow script mode training and restore checkpoint to resume training

Some sections of this notebook have been inspired by the tutorial:

Sagemaker Python SDK Examples: tensorflow_script_mode_training_and_serving.ipynb

In this notebook we describe the most relevant steps to start training a custom algorithm in AWS SageMaker without using a custom container, showing how to manage experiments and how to solve some of the problems that arise when training custom models in SageMaker script mode. Some basic SageMaker concepts are not detailed, in order to focus on the relevant ones.

The following steps will be explained:

- Create an Experiment and Trial to keep track of our experiments

- Load the training data to our training instance

- Create the scripts to train our custom model, a Transformer

- Create an Estimator to train our model in a TensorFlow 2.1 container in script mode

- Create metric definitions to keep track of them in SageMaker

- Download the trained model to make predictions

- Resume training using the latest checkpoint from a previous training

Amazon SageMaker Overview

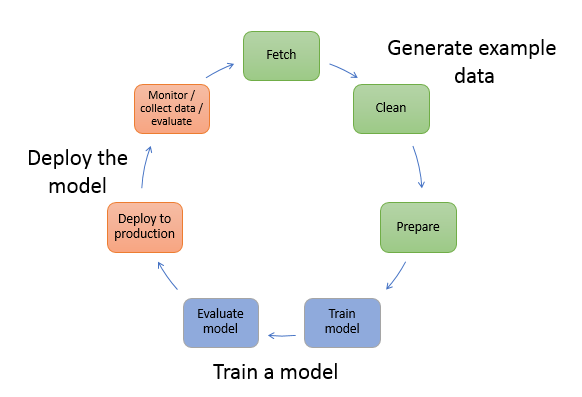

Amazon SageMaker is a fully managed machine learning service. With SageMaker, data scientists and developers can quickly and easily build and train machine learning models, and then directly deploy them into a production-ready hosted environment.

Amazon SageMaker Developer Guide

Amazon SageMaker provides many tools to help developers manage the machine learning lifecycle:

- Fetch, clean and transform the data: you can use SageMaker notebook instances to manipulate and analyze your data, then clean and transform it into the required format for your algorithm. You can also use the Pipelines functionality to serve the data to your model during training.

- Train and evaluate the model: there are many different ways to train your model. You can use the built-in algorithms and models provided by SageMaker, or you can use custom code to train in the most popular deep learning frameworks (TensorFlow, PyTorch, Apache MXNet, etc.) or even use Apache Spark. Finally, you can use your own custom algorithm, build a Docker container and then train the model on SageMaker. You can keep track of your model metrics to evaluate its performance.

- Deploy your model: once your model is trained, you can deploy it to a SageMaker endpoint and get predictions one at a time, or run predictions in batch mode.

A simple and popular way to get started and work with SageMaker is to use the Amazon SageMaker Python SDK. It provides Python APIs and containers that make it easy to train and deploy models in SageMaker, as well as examples for use with several different machine learning and deep learning frameworks.

Problem description

For this project we will develop notebooks and scripts to train a TensorFlow 2 Transformer model to solve a neural machine translation problem, translating simple sentences from English to Spanish. This problem and the model are extensively described in my Medium post "Attention is all you need: Discovering the Transformer paper".

Data description

For this exercise, we’ll use pairs of simple sentences from the Tatoeba project, where people contribute translations every day. The source text is in English and the target text is in Spanish. This is the link to some translations in different languages. There you can download the Spanish/English spa_eng.zip file, which contains 124,457 pairs of sentences.
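As a rough sketch of what the training script has to do with this file, the snippet below reads the first nsamples English/Spanish pairs from spa.txt. It assumes a tab-separated layout with the English sentence in the first column and the Spanish sentence in the second; if an extra attribution column is present, it is ignored. The helper load_pairs is hypothetical and is not taken from the project's utils.py.

```python
import io
from typing import Iterable, List, Tuple


def load_pairs(lines: Iterable[str], nsamples: int) -> List[Tuple[str, str]]:
    """Return up to `nsamples` (english, spanish) pairs from tab-separated lines.

    Hypothetical helper: assumes column 0 is English and column 1 is Spanish;
    any extra columns (e.g. attribution) are ignored.
    """
    pairs = []
    for line in lines:
        parts = line.rstrip("\n").split("\t")
        if len(parts) < 2:
            continue  # skip empty or malformed lines
        pairs.append((parts[0].strip(), parts[1].strip()))
        if len(pairs) >= nsamples:
            break
    return pairs


# Usage with an in-memory sample; in the notebook you would pass
# open(train_file, encoding="utf-8") instead.
sample = io.StringIO(
    "Go.\tVe.\n"
    "Hi.\tHola.\t(attribution)\n"
    "\n"
    "Run!\t¡Corre!\n"
)
print(load_pairs(sample, nsamples=2))
```

Reading line by line and stopping at nsamples keeps memory usage low even though the full file has more than 120,000 pairs.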

First, we will import and load the libraries to use in our project.

%load_ext autoreload

%autoreload 2

import os

import sagemaker

from sagemaker import get_execution_role

import pandas as pd

import numpy as np

import time

import pickle

import tensorflow as tf

# Create a SageMaker session to work with

sagemaker_session = sagemaker.Session()

# Get the role of our user and the region

role = get_execution_role()

region = sagemaker_session.boto_session.region_name

print(role)

print(region)

# Set the variables for data locations

data_folder_name='data'

train_filename = 'spa.txt'

non_breaking_en = 'nonbreaking_prefix.en'

non_breaking_es = 'nonbreaking_prefix.es'

# Set the directories for our model output

trainedmodel_path = 'trained_model'

output_data_path = 'output_data'

# Set the names of the artifacts that our model generates (model not included)

model_info_file = 'model_info.pth'

input_vocab_file = 'in_vocab.pkl'

output_vocab_file = 'out_vocab.pkl'

# Set the absolute path of the train data

train_file = os.path.abspath(os.path.join(data_folder_name, train_filename))

non_breaking_en_file = os.path.abspath(os.path.join(data_folder_name, non_breaking_en))

non_breaking_es_file = os.path.abspath(os.path.join(data_folder_name, non_breaking_es))

When working with Amazon SageMaker training jobs, which run in containers on a new instance or "VM", the data has to be shared through an S3 storage folder. For this purpose we define the bucket name and the folder names where our inputs and outputs will be stored. In our case we define:

- The training data URI: where our input data is located

- The output folder: where our training job saves the outputs from our model

- The checkpoint folder: where our model uploads the checkpoints

# Specify your bucket name

bucket_name = 'edumunozsala-ml-sagemaker'

# Set the training data folder in S3

training_folder = r'transformer-nmt/train'

# Set the output folder in S3

output_folder = r'transformer-nmt'

# Set the checkpoint in S3 folder for our model

ckpt_folder = r'transformer-nmt/ckpt'

training_data_uri = r's3://' + bucket_name + r'/' + training_folder

output_data_uri = r's3://' + bucket_name + r'/' + output_folder

ckpt_data_uri = r's3://' + bucket_name + r'/' + ckpt_folder

training_data_uri,output_data_uri,ckpt_data_uri

Then we upload the files needed for training to the training data folder in S3: the training data, the non-breaking prefixes for the inputs (English) and the non-breaking prefixes for the outputs (Spanish). Once uploaded, they can be loaded in the SageMaker container during training.

inputs = sagemaker_session.upload_data(train_file,

bucket=bucket_name,

key_prefix=training_folder)

sagemaker_session.upload_data(non_breaking_en_file,

bucket=bucket_name,

key_prefix=training_folder)

sagemaker_session.upload_data(non_breaking_es_file,

bucket=bucket_name,

key_prefix=training_folder)

Create an experiment and trial

Amazon SageMaker Experiments is a capability of Amazon SageMaker that lets you organize, track, compare, and evaluate your machine learning experiments.

Machine learning is an iterative process. You need to experiment with multiple combinations of data, algorithm and parameters, all the while observing the impact of incremental changes on model accuracy. Over time this iterative experimentation can result in thousands of model training runs and model versions. This makes it hard to track the best performing models and their input configurations. It’s also difficult to compare active experiments with past experiments to identify opportunities for further incremental improvements.

Experiments will help us to organize and manage all executions, metrics and results of a ML project.

# Install the library necessary to handle experiments

!pip install sagemaker-experiments

Load the libraries to handle experiments

# Import the libraries to work with Experiments in SageMaker

from smexperiments.experiment import Experiment

from smexperiments.trial import Trial

from smexperiments.trial_component import TrialComponent

Set the experiment and trial names, and a tag to help us identify the purpose of these items.

# Set the experiment name

experiment_name='tf-transformer'

# Set the trial name

trial_name="{}-{}".format(experiment_name,'single-gpu')

tags = [{'Key': 'my-experiments', 'Value': 'transformerEngSpa1'}]

You can create an experiment to track all the model training iterations. Experiments are a great way to organize your data science work. You can create experiments to organize all your model development work for: a business use case you are addressing (e.g. create an experiment named “customer churn prediction”), a data science team that owns the experiment (e.g. create an experiment named “marketing analytics experiment”), or a specific data science and ML project. Think of it as a “folder” for organizing your “files”.

We will create a Trial to track each training job run. This is just a simple example, not intended to explore all the capabilities of the product.

# create the experiment if it doesn't exist

try:
    training_experiment = Experiment.load(experiment_name=experiment_name)
    print('Loaded experiment ',experiment_name)
except Exception as ex:
    if "ResourceNotFound" in str(ex):
        training_experiment = Experiment.create(experiment_name=experiment_name,
                                                description = "Experiment to track trainings on my tensorflow Transformer Eng-Spa",
                                                tags = tags)
        print('Created experiment ',experiment_name)
    else:
        raise

# create the trial if it doesn't exist
try:
    single_gpu_trial = Trial.load(trial_name=trial_name)
    print('Loaded trial ',trial_name)
except Exception as ex:
    if "ResourceNotFound" in str(ex):
        single_gpu_trial = Trial.create(experiment_name=experiment_name,
                                        trial_name= trial_name,
                                        tags = tags)
        print('Created trial ',trial_name)
    else:
        raise
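The two try/except blocks above follow the same load-or-create pattern. A small generic helper, sketched below, makes that pattern explicit and re-raises any error that is not a "resource not found" error, so problems such as missing permissions are not hidden. The name load_or_create is hypothetical; in the notebook you would pass, for example, lambda: Experiment.load(experiment_name=experiment_name) and a lambda wrapping Experiment.create(...). The usage below uses stand-in functions instead of real SageMaker calls.

```python
from typing import Callable, Tuple, TypeVar

T = TypeVar("T")


def load_or_create(load: Callable[[], T], create: Callable[[], T]) -> Tuple[T, bool]:
    """Try `load()`; fall back to `create()` only when the resource does not exist.

    Returns the object and a flag telling whether it was newly created.
    Matching on the "ResourceNotFound" text mirrors the notebook's own check.
    """
    try:
        return load(), False
    except Exception as ex:
        if "ResourceNotFound" in str(ex):
            return create(), True
        raise  # any other failure (permissions, throttling, etc.) should surface


# Stand-in for a load call on a resource that does not exist yet.
def missing():
    raise RuntimeError("An error occurred (ResourceNotFound) when calling DescribeExperiment")


obj, created = load_or_create(missing, lambda: "new-experiment")
print(obj, created)
```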

Trackers

Another interesting tool worth mentioning is the Tracker object. Trackers can store information about different aspects of our model or training process, such as inputs, parameters, artifacts or metrics. A tracker is attached to a trial, associating that information with the training job, so we can record it and analyze it later in the experiment. Note that only parameters, input artifacts, and output artifacts are saved to SageMaker. Metrics are saved to file.

As an example, we create a Tracker to register the input data and two parameters about how that data is processed in our project.

from smexperiments.tracker import Tracker

# Create the tracker for the input data
tracker_name='TextPreprocessing'
trial_comp_name = None # Change to an existing TrialComponent name to load it
try:
    tracker = Tracker.load(trial_component_name=trial_comp_name)
    print('Loaded Tracker ',tracker_name)
except Exception as ex:
    tracker = Tracker.create(display_name=tracker_name)
    tracker.log_input(name="EngtoSpa Translations", media_type="s3/uri", value=inputs)
    tracker.log_parameters({
        "Tokenizer": 'Subword',
        "Max Length": 15,
    })
    print('Created Tracker ',tracker_name)

# Attach the Tracker to the trial

single_gpu_trial.add_trial_component(tracker.trial_component)

Our last step consists of creating the experiment configuration: a dictionary containing the experiment name, the trial name and the trial component display name, which will be used to label our training job.

# Create a configuration definition for our experiment and trial

trial_comp_name = 'single-gpu-components'

# Set the configuration parameters for the experiment

experiment_config = {'ExperimentName': training_experiment.experiment_name,

'TrialName': single_gpu_trial.trial_name,

'TrialComponentDisplayName': trial_comp_name}

Check and show information about the experiment and trial

print('Experiment: ',training_experiment.experiment_name)

# Show the trials in the experiment

#for trial in training_experiment.list_trials():
#    print('Trial: ',trial.trial_name)
for trial_comp in TrialComponent.list(trial_name=single_gpu_trial.trial_name):
    print('Trial Components: ',trial_comp)

Construct a script for training

Script mode is a training script format for TensorFlow that lets you execute any TensorFlow training script in SageMaker with minimal modification. The SageMaker Python SDK handles transferring your script to a SageMaker training instance. On the training instance, SageMaker's native TensorFlow support sets up training-related environment variables and executes your training script. In this tutorial, we use the SageMaker Python SDK to launch a training job.

Script mode supports training with a Python script, a Python module, or a shell script.

This project's training script was adapted from the TensorFlow Transformer model we developed in a previous post (mentioned above). We have modified it to handle:

- the train_file, non_breaking_in and non_breaking_out parameters, passed in with the file names of the training dataset, the non-breaking prefixes for the input data and the non-breaking prefixes for the output data.

- the data_dir parameter, passed in by SageMaker with the value of the environment variable SM_CHANNEL_TRAINING. This is the local path inside the container (by default /opt/ml/input/data/training) where SageMaker copies the input data from the S3 training channel before training starts.

- the model_dir parameter passed in by SageMaker. This is an S3 path which can be used for data sharing during distributed training and checkpointing and/or model persistence. We have also added an argument-parsing function to handle processing training-related variables.

- the local checkpoint path to store the model checkpoints during training. We use the default value /opt/ml/checkpoints, whose content will be uploaded to S3. We explain this behavior later when defining our estimator.

- At the end of the training job we have added a step to export the trained model (only the weights) to the path stored in the environment variable SM_MODEL_DIR, which always points to /opt/ml/model. This is critical because SageMaker uploads all the model artifacts in this folder to S3 at the end of training.

- the output_data_dir parameter, passed in by SageMaker with the value of the environment variable SM_OUTPUT_DATA_DIR. This is a folder path used to save output data from our model. This folder will be uploaded to S3 as output.tar.gz. In our case we need to save the tokenizer for the input texts, the tokenizer for the outputs, the input and output vocab sizes and the eos and sos tokens.

In addition to the train.py file, our source code folder includes the files:

- model.py: Tensorflow model definition

- utils.py: utility functions to process the text data

- utils_train.py: contains functions to calculate the loss and learning rate scheduler.

Here is the entire script for the train.py file:

!pygmentize 'train/train.py'

Our source code needs the tensorflow_datasets library, which is not included in the TensorFlow 2.1 container image provided by SageMaker. To solve this issue, we explicitly install it from our train.py file using the command subprocess.check_call([sys.executable, "-q", "-m", "pip", "install", package]).
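A minimal sketch of such a runtime install step is shown below. Note that in the command quoted above the -q flag comes before -m, so it is consumed by the Python interpreter rather than by pip; placing it after install is what actually quiets pip's output. The function names are hypothetical, and the example only builds the command, so nothing is installed when it runs.

```python
import subprocess
import sys
from typing import List


def pip_install_command(package: str, quiet: bool = True) -> List[str]:
    """Build the command that installs `package` into the running interpreter."""
    cmd = [sys.executable, "-m", "pip", "install"]
    if quiet:
        cmd.append("-q")  # pip's own quiet flag goes after the subcommand
    cmd.append(package)
    return cmd


def pip_install(package: str) -> None:
    """Install `package` at runtime; raises CalledProcessError if pip fails."""
    subprocess.check_call(pip_install_command(package))


# At the top of train.py one would call, for example:
#     pip_install("tensorflow-datasets")
print(pip_install_command("tensorflow-datasets"))
```

Using sys.executable guarantees the package is installed for the same interpreter that runs train.py inside the container.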

Create a training job using the TensorFlow estimator

The sagemaker.tensorflow.TensorFlow estimator handles locating the script mode container where the model will run, uploading your script or source code to an S3 location and creating a SageMaker training job. Let's call out a few important parameters here:

- source_dir and entry_point: the folder with the source code and the file to run for training.

- framework_version: the TensorFlow version we want to run our code with.

- py_version: set to 'py3' to indicate that we are using script mode, since legacy mode supports only Python 2. Python 2 is deprecated, but you can still use script mode with Python 2 by setting py_version to 'py2' and script_mode to True.

- code_location: the S3 folder URI where source_dir will be uploaded. When the instance starts, the content of that folder is downloaded to the local path /opt/ml/code. The entry_point, our main script, has to be included in that folder.

- output_path: the S3 path where all the outputs of our training job will be uploaded when training ends. In our example, the local content of the folders SM_MODEL_DIR and SM_OUTPUT_DATA_DIR is uploaded to this S3 folder.

- checkpoint_local_path and checkpoint_s3_uri: these parameters are explained in the section "Resume training from a checkpoint".

- script_mode = True: sets script mode.

from sagemaker.tensorflow import TensorFlow

# Uncomment the type of instance to use

#instance_type='ml.m4.4xlarge'

instance_type='ml.p2.xlarge'

#instance_type='local'

Another important parameter of our TensorFlow estimator is instance_type, the type of "virtual machine" on which the container will run. The values we use in this project are:

- local: the container runs locally on the notebook instance. This is very useful to debug or to verify that our estimator definition is correct and that train.py runs successfully. Running the container locally is much faster, because the start-up time of a remote instance is too long when you are coding and debugging.

- ml.mX.Yxlarge: a CPU instance, useful when you are running your code for a short training, for example for validation purposes. Check the AWS documentation for a list of alternative instance types.

- ml.p2.xlarge: this instance uses a GPU, and it is the preferred one when you want to launch a long-running training job.

When running in local mode, some estimator functionalities are not available, such as uploading the checkpoints to S3, so the corresponding parameters should not be defined.

Finally, we want to mention the definition of metrics. Using a dictionary, we can define a metric name and the regular expression used to extract its value from the messages the training script writes to the logs (stdout) during training. Later we can see those metrics in the SageMaker console, as shown in a following section.

# Define the metrics to search for

metric_definitions = [{'Name': 'loss', 'Regex': 'Loss ([0-9\\.]+)'},{'Name': 'Accuracy', 'Regex': 'Accuracy ([0-9\\.]+)'}]
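To check a regular expression before launching a job, you can apply it locally to a sample log line. The line below is a hypothetical example of what train.py might print; the real format depends on the script. SageMaker searches the job's log output with each Regex and records the number captured by the group in parentheses; the helper extract_metrics only imitates that locally.

```python
import re

# Same definitions as in the notebook cell above.
metric_definitions = [
    {"Name": "loss", "Regex": "Loss ([0-9\\.]+)"},
    {"Name": "Accuracy", "Regex": "Accuracy ([0-9\\.]+)"},
]

# Hypothetical log line; the real format depends on what train.py prints.
log_line = "Epoch 1 Batch 100 Loss 2.4512 Accuracy 0.1873"


def extract_metrics(line, definitions):
    """Return {metric name: float value} for every definition that matches `line`."""
    values = {}
    for definition in definitions:
        match = re.search(definition["Regex"], line)
        if match:
            values[definition["Name"]] = float(match.group(1))
    return values


print(extract_metrics(log_line, metric_definitions))
```

The patterns are case sensitive, so "Loss" and "Accuracy" must be spelled exactly as train.py prints them.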

Now, we can define the estimator:

# Create the Tensorflow estimator using a Tensorflow 2.1 container

estimator = TensorFlow(entry_point='train.py',

source_dir="train",

role=role,

instance_count=1,

instance_type=instance_type,

framework_version='2.1.0',

py_version='py3',

output_path=output_data_uri,

code_location=output_data_uri,

base_job_name='tf-transformer',

script_mode= True,

                       #checkpoint_local_path = 'ckpt', #Use default value /opt/ml/checkpoints

checkpoint_s3_uri = ckpt_data_uri,

metric_definitions = metric_definitions,

hyperparameters={

'epochs': 8,

'nsamples': 60000,

'resume': False,

'train_file': 'spa.txt',

'non_breaking_in': 'nonbreaking_prefix.en',

'non_breaking_out': 'nonbreaking_prefix.es'

})

Start the training job: fit

To start a training job, we call the estimator.fit method with a few parameter values:

- An S3 location used as the input. We pass it in a dictionary under the channel name 'training', which is also the default channel name fit uses when it receives a plain S3 URI. In the training script we can access the training data from the local location stored in SM_CHANNEL_TRAINING. fit accepts a couple of other types of input as well; see the API documentation for details.

- job_name: the name for the training job.

- experiment_config: the dictionary with the names of the experiment and trial to attach this job to.

When training starts, the TensorFlow container executes train.py, passing hyperparameters and model_dir from the estimator as script arguments. Because we didn't explicitly define it, model_dir defaults to s3://<DEFAULT_BUCKET>/<TRAINING_JOB_NAME>/model, so the script execution is as follows:

python train.py --model_dir s3://<DEFAULT_BUCKET>/<TRAINING_JOB_NAME>/model --epochs 8 --nsamples 60000 ...

When training is complete, the training job will upload the saved model and other output artifacts to S3.
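Inside the container, the hyperparameters arrive as command-line strings, so a value such as 'resume': False reaches the script as the text False. The sketch below shows one way train.py could parse these arguments; it is an assumption about the script, not a copy of it. Note that argparse's type=bool would turn the string "False" into True (any non-empty string is truthy), which is why a small str2bool converter is used. Directory defaults come from the SM_CHANNEL_TRAINING, SM_MODEL_DIR and SM_OUTPUT_DATA_DIR environment variables set by SageMaker, falling back to the standard /opt/ml paths; the argument name --sm_model_dir and the numeric defaults are hypothetical.

```python
import argparse
import os


def str2bool(value: str) -> bool:
    """Convert strings such as 'True', 'false' or '1' into a real boolean."""
    if value.lower() in ("true", "1", "yes"):
        return True
    if value.lower() in ("false", "0", "no"):
        return False
    raise argparse.ArgumentTypeError(f"Boolean value expected, got {value!r}")


def parse_args(argv=None):
    parser = argparse.ArgumentParser()
    # Hyperparameters defined in the estimator (illustrative defaults)
    parser.add_argument("--epochs", type=int, default=1)
    parser.add_argument("--nsamples", type=int, default=5000)
    parser.add_argument("--resume", type=str2bool, default=False)
    parser.add_argument("--train_file", type=str, default="spa.txt")
    parser.add_argument("--non_breaking_in", type=str, default="nonbreaking_prefix.en")
    parser.add_argument("--non_breaking_out", type=str, default="nonbreaking_prefix.es")
    # Passed by the TensorFlow estimator itself (an S3 URI)
    parser.add_argument("--model_dir", type=str)
    # Local folders that SageMaker exposes through environment variables
    parser.add_argument("--data_dir", type=str,
                        default=os.environ.get("SM_CHANNEL_TRAINING", "/opt/ml/input/data/training"))
    parser.add_argument("--sm_model_dir", type=str,
                        default=os.environ.get("SM_MODEL_DIR", "/opt/ml/model"))
    parser.add_argument("--output_data_dir", type=str,
                        default=os.environ.get("SM_OUTPUT_DATA_DIR", "/opt/ml/output/data"))
    # parse_known_args ignores unexpected extra arguments instead of failing
    args, _ = parser.parse_known_args(argv)
    return args


args = parse_args(["--model_dir", "s3://bucket/job/model", "--epochs", "8", "--resume", "False"])
print(args.epochs, args.resume, args.model_dir)
```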

# Set the job name and show it

job_name = '{}-{}'.format(trial_name,time.strftime("%Y-%m-%d-%H-%M-%S", time.gmtime()))

print(job_name)
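SageMaker training job names must be 1 to 63 characters long and may contain only letters, digits and hyphens; they must start with a letter or digit and cannot end with a hyphen. Because the name here concatenates the trial name with a timestamp, a quick local check can catch an over-long or invalid trial name before fit fails. The helper make_job_name below is a hypothetical sketch of that check.

```python
import re
import time
from typing import Optional

# Constraint documented for the TrainingJobName field of CreateTrainingJob.
JOB_NAME_PATTERN = re.compile(r"^[a-zA-Z0-9](-*[a-zA-Z0-9]){0,62}$")


def make_job_name(prefix: str, timestamp: Optional[str] = None) -> str:
    """Append a UTC timestamp to `prefix` and validate the resulting job name."""
    if timestamp is None:
        timestamp = time.strftime("%Y-%m-%d-%H-%M-%S", time.gmtime())
    name = "{}-{}".format(prefix, timestamp)
    if len(name) > 63 or not JOB_NAME_PATTERN.match(name):
        raise ValueError("Invalid training job name: {!r}".format(name))
    return name


print(make_job_name("tf-transformer-single-gpu", "2020-11-12-18-36-15"))
```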

Call fit to train the model with the TensorFlow 2.1 script.

# Call the fit method to launch the training job

estimator.fit({'training':training_data_uri}, job_name = job_name,

experiment_config = experiment_config)

Save the trial and the experiment; you can then view the experiment and its trials in SageMaker Studio.

# Save the trial

single_gpu_trial.save()

# Save the experiment

training_experiment.save()

You can monitor the metrics that a training job emits in real time in the CloudWatch console:

- Open the CloudWatch console at https://console.aws.amazon.com/cloudwatch/.

- Choose Metrics, then choose /aws/sagemaker/TrainingJobs.

- Choose TrainingJobName.

- On the All metrics tab, choose the names of the training metrics that you want to monitor.

Another option is to monitor the metrics by using the SageMaker console.

- Open the SageMaker console at https://console.aws.amazon.com/sagemaker/.

- Choose Training jobs, then choose the training job whose metrics you want to see.

- Choose TrainingJobName.

- In the Monitor section, you can review the graphs of instance utilization and algorithm metrics.

It is a simple way to check how your model is "learning" during the training stage.

Restore a training job and download the trained model

At this point, we have a trained model stored in S3. But we are interested in making some predictions with it.

After you train your model, you can deploy it using Amazon SageMaker to get predictions in any of the following ways:

- To set up a persistent endpoint to get one prediction at a time, use SageMaker hosting services.

- To get predictions for an entire dataset, use SageMaker batch transform.

In this notebook we do not cover these options, because sometimes we are more interested in reloading our model in a new notebook to apply an evaluation method or to study its parameters or gradients. So here we download the model artifacts from S3 and load them into an "empty" model instance.

If we have just trained a model using our estimator variable in this notebook execution, we can skip this step. But you have probably trained your model for hours and now need to restore your estimator variable from a previous training job. Find the training job whose model you want to restore in the SageMaker console, copy its name and paste it into the next code cell. Then call the attach method of the TensorFlow estimator class, and you can continue working with that training job.

We can skip the next cell if the previous estimator.fit command was executed.

from sagemaker.tensorflow import TensorFlow

# Set the training job you want to attach to the estimator object

# Use this option if the training job was not trained in this execution

my_training_job_name = 'tf-transformer-single-gpu-2020-11-12-18-36-15'

# If the training job was run in this execution, we can retrieve its name from the job_name variable

#my_training_job_name = job_name

# Attach the estimator to the selected training job

estimator = TensorFlow.attach(my_training_job_name)

# Set the job_name

job_name = estimator.latest_training_job.job_name

print('Job name where the model will be restored: ',estimator.latest_training_job.job_name)

print('Dir of model data: ',estimator.model_data)

print('Dir of output data: ',output_data_uri)

print('Bucket name: ',bucket_name)

Download the trained model

The estimator attribute model_data points to the model.tar.gz file, which contains the saved model. The other output files from our model, which we need to rebuild the model and to tokenize or detokenize the sentences, can be found in the S3 file output_path/<job_name>/output/output.tar.gz. We can download both files and unzip them.

# Set the model and the output path in S3 to download the data

init_model_path = len('s3://')+len(bucket_name)+1

s3_model_path=estimator.model_data[init_model_path:]

s3_output_data=output_data_uri[init_model_path:]+'/{}/output/output.tar.gz'.format(job_name)

print('Dir to download trained model: ', s3_model_path)

print('Dir to download model outputs: ', s3_output_data)

sagemaker_session.download_data(trainedmodel_path,bucket_name,s3_model_path)

sagemaker_session.download_data(output_data_path,bucket_name,s3_output_data)
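The slicing above relies on the URI starting exactly with s3://<bucket>/. A slightly more robust alternative, sketched below with the standard library's urllib.parse, splits any S3 URI into its bucket and key; the helper name split_s3_uri is hypothetical. The resulting key could then be passed to sagemaker_session.download_data as the key prefix.

```python
from urllib.parse import urlparse


def split_s3_uri(uri: str):
    """Split 's3://bucket/some/key' into ('bucket', 'some/key')."""
    parsed = urlparse(uri)
    if parsed.scheme != "s3":
        raise ValueError("Not an S3 URI: {}".format(uri))
    return parsed.netloc, parsed.path.lstrip("/")


bucket, key = split_s3_uri(
    "s3://edumunozsala-ml-sagemaker/transformer-nmt/"
    "tf-transformer-single-gpu-2020-11-12-18-36-15/output/model.tar.gz"
)
print(bucket)
print(key)
```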

Next, extract the contents of the model.tar.gz file returned by the training job in SageMaker:

!tar -zxvf $trainedmodel_path/model.tar.gz

Extract the files from output.tar.gz, so that all of them end up in the working directory:

!tar -xvzf $output_data_path/output.tar.gz #--strip-components=1
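If you prefer to stay in Python, or want an effect similar to the commented-out --strip-components=1 option, the standard tarfile module can extract every regular file of an archive directly into a target folder, dropping any directory prefix. This is a sketch: extract_flat is a hypothetical helper, and it assumes no two members of the archive share the same file name.

```python
import os
import tarfile


def extract_flat(archive_path: str, target_dir: str = ".") -> list:
    """Extract all regular files of a .tar.gz into `target_dir`, ignoring folders."""
    extracted = []
    with tarfile.open(archive_path, "r:gz") as tar:
        for member in tar.getmembers():
            if not member.isfile():
                continue  # skip directories, links, etc.
            # Keep only the base name; this also blocks paths such as '../x'
            member.name = os.path.basename(member.name)
            tar.extract(member, path=target_dir)
            extracted.append(member.name)
    return extracted


# Usage in the notebook:
#     extract_flat(os.path.join(output_data_path, "output.tar.gz"))
```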

Import the tensorflow model and load the model

We import the model.py file with our model definition, but we only have the weights of the model, so we need to rebuild it. The model parameters were saved during training in model_info.pth; we just need to read that file and use the parameters to create an empty instance of the model. Then we can load the weights into that instance with load_weights().

from train.model import Transformer

# Read the parameters from a dictionary

with open(model_info_file, 'rb') as f:
    model_info = pickle.load(f)

print('Model parameters',model_info)

#Create an instance of the Transformer model and load the saved weights into it

transformer = Transformer(vocab_size_enc=model_info['vocab_size_enc'],

vocab_size_dec=model_info['vocab_size_dec'],

d_model=model_info['d_model'],

n_layers=model_info['n_layers'],

FFN_units=model_info['ffn_dim'],

n_heads=model_info['n_heads'],

dropout_rate=model_info['drop_rate'])

#Load the saved model

# To do: Use variable to store the model name and pass it in as a hyperparameter of the estimator

transformer.load_weights('transformer')
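For reference, the training side of this exchange can be as simple as pickling a dictionary. The sketch below assumes model_info.pth is a plain pickled dict holding the keys read in this notebook (the .pth extension does not imply a PyTorch file here, since it is read back with pickle.load). The numeric values are placeholders, not the ones used in the project, and a temporary folder stands in for the directory train.py writes to.

```python
import os
import pickle
import tempfile

# Placeholder values: the real ones come from the tokenizers and the
# hyperparameters used in train.py.
model_info = {
    "vocab_size_enc": 8000, "vocab_size_dec": 8000,
    "d_model": 128, "n_layers": 4, "ffn_dim": 512, "n_heads": 8, "drop_rate": 0.1,
    "sos_token_input": 7998, "eos_token_input": 7999,
    "sos_token_output": 7998, "eos_token_output": 7999,
}

with tempfile.TemporaryDirectory() as out_dir:  # stands in for the training output folder
    path = os.path.join(out_dir, "model_info.pth")
    with open(path, "wb") as f:
        pickle.dump(model_info, f)
    # Reading it back exactly as the notebook does
    with open(path, "rb") as f:
        restored = pickle.load(f)

print(restored == model_info)
```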

Make some predictions

And now everything is ready to make predictions with our trained model:

- Import the predict.py file with the functions to make a prediction and to translate a sentence. The code was described in the original post.

- Read the files and load the tokenizers for the input and output sentences.

- Call the translate function with the model, the tokenizers, the sos and eos tokens, the sentence to translate and the max length of the output. It returns the detokenized predicted sentence, as plain text, with the translation.

# Install the library necessary to tokenize the sentences

!pip install tensorflow-datasets

from serve.predict import translate

import tensorflow_datasets as tfds

Load the input and output tokenizers (vocabularies) used in training. We need them to encode and decode the sentences.

# Read the parameters from a dictionary

#model_info_path = os.path.join(model_dir, 'model_info.pth')

with open(input_vocab_file, 'rb') as f:
    tokenizer_inputs = pickle.load(f)

with open(output_vocab_file, 'rb') as f:
    tokenizer_outputs = pickle.load(f)

#Show some translations

sentence = "you should pay for it."

print("Input sentence: {}".format(sentence))

predicted_sentence = translate(transformer,sentence,tokenizer_inputs, tokenizer_outputs,15,model_info['sos_token_input'],

model_info['eos_token_input'],model_info['sos_token_output'],

model_info['eos_token_output'])

print("Output sentence: {}".format(predicted_sentence))

#Show some translations

sentence = "This is a really powerful method!"

print("Input sentence: {}".format(sentence))

predicted_sentence = translate(transformer,sentence,tokenizer_inputs, tokenizer_outputs,15,model_info['sos_token_input'],

model_info['eos_token_input'],model_info['sos_token_output'],

model_info['eos_token_output'])

print("Output sentence: {}".format(predicted_sentence))

Resume training from a checkpoint

Sometimes we need to stop our training, maybe to do some research on the model's performance or to reallocate more resources to continue with the project. When that is done, we need to resume training, restoring the model and optimizer states, and continue for some more epochs to achieve a final trained model with better performance.

To help with that scenario, the checkpoint_local_path and checkpoint_s3_uri estimator parameters are very relevant. The first one is the local path, inside the container, that the algorithm writes its checkpoints to. SageMaker persists all files under this path to checkpoint_s3_uri continually during training. On job startup the reverse happens: data from the S3 location is downloaded to this path before the algorithm is started. If the path is unset, SageMaker assumes the checkpoints will be provided under /opt/ml/checkpoints/. Using this feature, we can resume training from the last checkpoint (or a previous one).

For this purpose, we set the hyperparameter resume to True and call fit on the estimator again to run another training job.
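On the script side, resuming boils down to restoring the latest checkpoint from the local checkpoint folder when resume is set. The sketch below shows the idea with TensorFlow's tf.train.Checkpoint and tf.train.CheckpointManager, tracking only an epoch counter for brevity; in train.py the checkpoint would also hold the Transformer and its optimizer. It assumes TensorFlow 2.x is installed and uses a temporary directory in place of /opt/ml/checkpoints; the helper name build_checkpoint is hypothetical.

```python
import tempfile

import tensorflow as tf


def build_checkpoint(ckpt_dir: str):
    """Create a checkpoint holding an epoch counter and a manager for `ckpt_dir`."""
    epoch = tf.Variable(0, dtype=tf.int64)
    # In train.py you would also pass model=transformer, optimizer=optimizer here.
    ckpt = tf.train.Checkpoint(epoch=epoch)
    manager = tf.train.CheckpointManager(ckpt, ckpt_dir, max_to_keep=3)
    return epoch, ckpt, manager


ckpt_dir = tempfile.mkdtemp()  # stands in for /opt/ml/checkpoints

# First job: train for 3 epochs, saving a checkpoint after each one.
epoch, ckpt, manager = build_checkpoint(ckpt_dir)
for _ in range(3):
    epoch.assign_add(1)
    manager.save()

# Second job with resume=True: the new manager finds the saved checkpoints.
resume = True
epoch, ckpt, manager = build_checkpoint(ckpt_dir)
if resume and manager.latest_checkpoint:
    ckpt.restore(manager.latest_checkpoint)
print("Resuming after epoch", int(epoch.numpy()))
```

Because SageMaker downloads the contents of checkpoint_s3_uri into the local checkpoint folder before the script starts, the same restore logic works across separate training jobs.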

Load the experiment and trial created in a previous run or create a new one:

# Set the experiment name

experiment_name='tf-transformer'

# Set the trial name

trial_name="{}-{}".format(experiment_name,'single-gpu')

trial_comp_name = 'single-gpu-training-job'

tags = [{'Key': 'my-experiments', 'Value': 'transformerEngSpa1'}]

# create the experiment if it doesn't exist

try:
    experiment = Experiment.load(experiment_name=experiment_name)
    print('Loaded the experiment')
except Exception as ex:
    if "ResourceNotFound" in str(ex):
        experiment = Experiment.create(experiment_name=experiment_name)
        print('Created the experiment')
    else:
        raise

# create the trial if it doesn't exist
try:
    trial = Trial.load(trial_name=trial_name)
    print('Loaded the trial')
except Exception as ex:
    if "ResourceNotFound" in str(ex):
        trial = Trial.create(experiment_name=experiment_name, trial_name=trial_name)
        print('Created the trial')
    else:
        raise

# Set the configuration parameters for the experiment

experiment_config = {'ExperimentName': experiment.experiment_name,

'TrialName': trial.trial_name,

'TrialComponentDisplayName': trial_comp_name}

Create an Estimator for a TensorFlow 2.1 model and set the hyperparameter resume to True to force the model to restore the latest checkpoint and resume training for the selected number of epochs.

#instance_type='ml.m5.xlarge'

#instance_type='ml.m4.4xlarge'

instance_type='ml.p2.xlarge'

#instance_type='local'

# Define the metrics to search for

metric_definitions = [{'Name': 'loss', 'Regex': 'Loss ([0-9\\.]+)'},{'Name': 'Accuracy', 'Regex': 'Accuracy ([0-9\\.]+)'}]

# Create an estimator with the hyperparameter resume = True

estimator = TensorFlow(entry_point='train.py',

source_dir='train',

role=role,

instance_count=1,

instance_type=instance_type,

framework_version='2.1.0',

py_version='py3',

output_path=output_data_uri,

code_location=output_data_uri,

base_job_name='tf-transformer',

script_mode= True,

checkpoint_s3_uri = ckpt_data_uri,

metric_definitions = metric_definitions,

hyperparameters={

'epochs': 5,

'nsamples': 60000,

'resume': True,

'train_file': 'spa.txt',

'non_breaking_in': 'nonbreaking_prefix.en',

'non_breaking_out': 'nonbreaking_prefix.es'

})

# Set the job name and show it

job_name = '{}-{}'.format(trial_name,time.strftime("%Y-%m-%d-%H-%M-%S", time.gmtime()))

print(job_name)

# Fit or train the model from the latest checkpoint

estimator.fit({'training':training_data_uri}, job_name = job_name,

experiment_config = experiment_config)

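This training job returns a new trained model, which you can download to make predictions as described in a previous section.

Delete the experiment

Finally, once we no longer need them, we can delete the experiment together with all its trials and trial components:

training_experiment.delete_all(action="--force")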

References

- References for experiments and trials: https://github.com/shashankprasanna/sagemaker-training-tutorial/blob/master/sagemaker-training-tutorial.ipynb